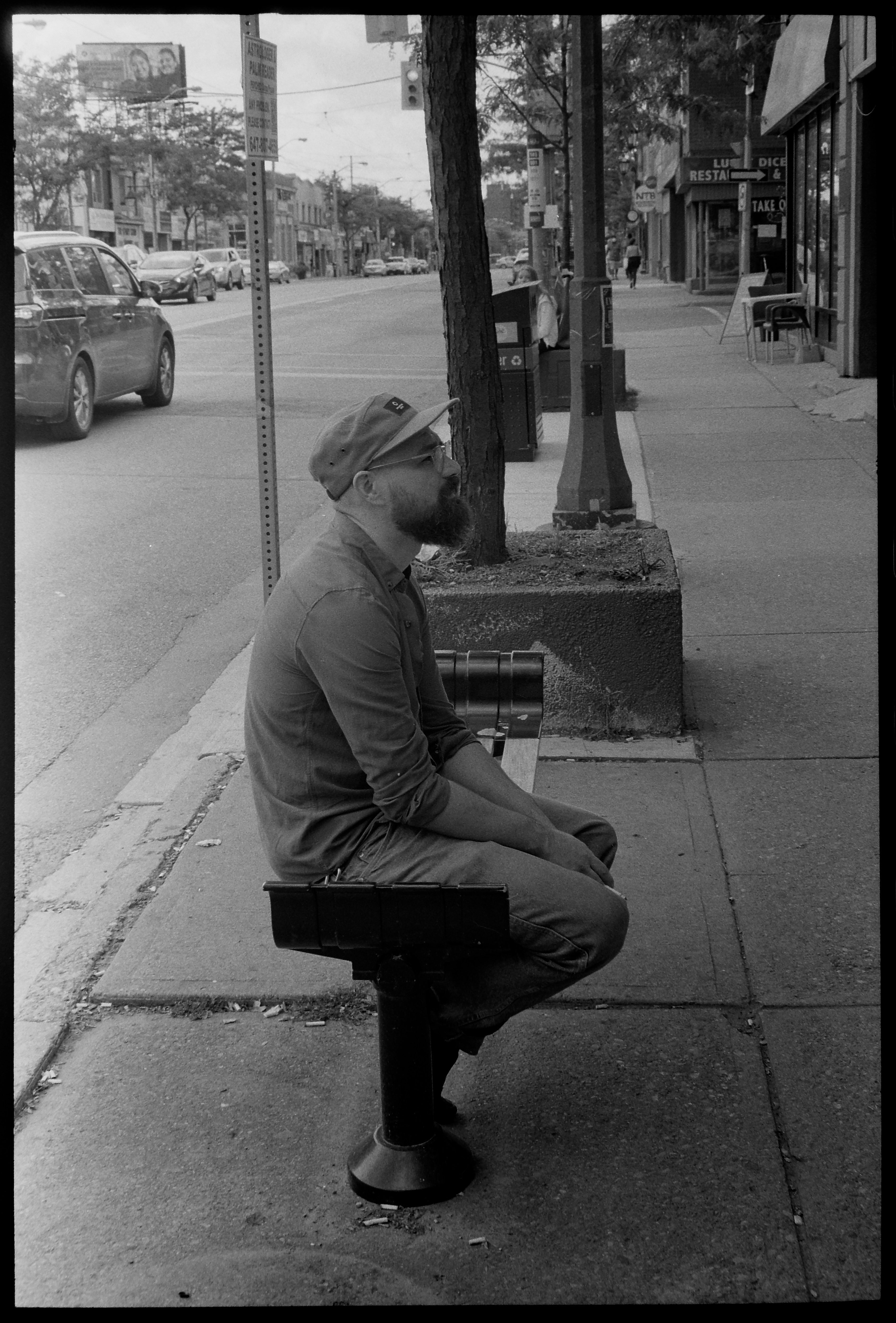

This post is as much about film photography as it is about the human mind. We begin with the mind.

Mind

Like the other organs of the body, the mind exists in the body as yet another organ. It is special because it makes you aware of the other organs and their needs, and it is even aware of its own existence (sometimes even wondering about it). The mind is the fountainhead of the entire human process – it invents philosophies, religions and languages; it imagines, wages wars, makes love, preaches peace, converses, and develops complex societal constructs like religion and marriage for collective sustenance, and so on. It even invented the concept of “blogging” and, consequently, produced this blog. But sometimes the mind needs care and nurture. Just like the engine of a 2009 Mini Cooper that eats quarts of oil and sputters to a halt on the most intense trips through the Sierra ranges, the mind collapses from time to time. Too many browser tabs are open in the mind, and all the RAM is eaten up. The browser freezes; life freezes. The body tirelessly cranks the engine, hoping that some fuel will reach the chambers of the gray matter and ignite it to life – but the stubborn mind does not relent. This post is not about understanding what led to the freeze, but about how we can begin thawing our thoughts by learning to love capturing life.

Film

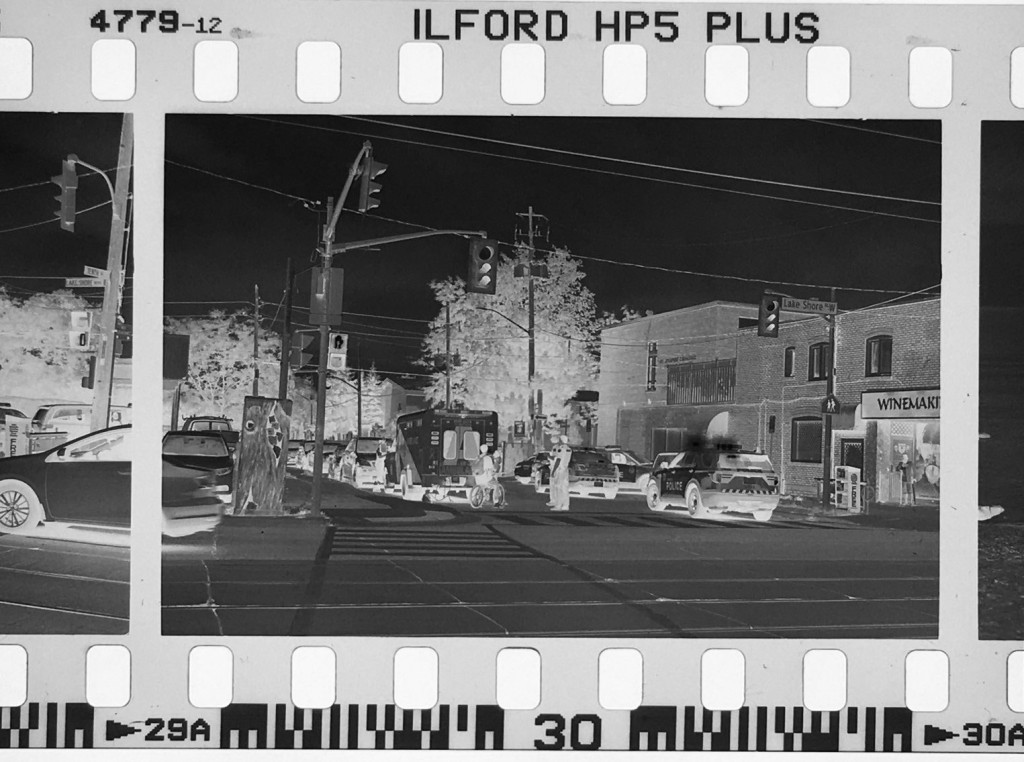

50% of humans on the planet are now 3 seconds away from taking a picture. What used to be an involved and time-consuming process, one that required knowledge of optics, chemistry, physics and “tricks”, along with an artistic passion for capturing life, is just second nature now. Bored, with nothing else to do? Take out your phone, strike your favorite facial expression and boom! Selfie! The marvel of a ghostly image on a silver-plated copper sheet, and later a negative on celluloid film, no longer inspires awe. But film was a special invention. It was not instant gratification. It required some thought, a moment to reflect, some skill, anticipation and an acknowledgement of technological limits. I recently showed a few film negatives to some very young Instagrammers in a remote town in India, and they found it hard to believe that it took days to get from the click to a photograph you could actually see. And film is not that old. Today, film sits somewhere at the intersection of hipsters, film school students, experimental artists, hobbyists and possibly some weird people like me who think film can heal your mind.

But indeed, it can.

Impermanence

The process of film capture and processing is not just clicks and chemicals. It is an appreciation of the marvel of the universe and an understanding of our insignificant role in it. Each film photograph is a cosmic event, a happenstance, a confluence of the universe creating the right conditions for you to pause life in its tracks. For each film photograph you have seen, millions of photons reflected off the objects you see in it, photons that came directly or indirectly from the energy of the sun, which in turn was born in the womb of our Milky Way from a solar nebula. The silver in the film’s silver-halide crystals came from that same star-birth, trapped in the layers of the earth until a human discovered that light could darken it, and other humans perfected the technology to freeze photons in their tracks on celluloid film. These tiny silver grains, each with its own chemical story and history of existence, now sit comfortably in varying degrees of darkness and somehow make a picture. In life as well, situations exist in shades of gray. And what’s more, it all happened with a “click”. Somehow a few electrons in a photographer’s mind fired, the hand moved and the gentle curtains inside the camera drew back as if welcoming these photons – the actors of life – on stage with cheer and applause, letting them into the tiny theater inside the camera where the only audience is you and the film. The film impartially receives and stores them. The film does not judge. The film only captures. In this sense, a true photographer will not judge the photons, and thus will not judge what produced them, be it objects or humans or the circumstances in the theater of life that the film captures. Likewise, you will not judge what enters your mind as life plays out. Your role is limited. On cosmic scales, you are a mere “click”.
All your worries, desires, lost and found loves, family, Taj Mahal, Himalayas, the suffering millions or the nefarious tech bros – all will perish just as the waves of time level the beach sand. Eventually, even the film fades away. The first lesson of film is the acceptance of duality – of impermanence in a seemingly permanent record.

Anticipation

The image from a film camera’s lens is not first captured on film. It is captured in the mind of the photographer, via the viewfinder. In the few microseconds before the click, the mind captures and develops the film; it even views it, anticipating and marveling at the fine shades and exposures: the color tone, the perfect metering and the marvelous job it must have done in selecting the shutter speed. The mind wonders what might happen when the photograph is finally developed. Will it bring me laurels and global appreciation? Will my grandkids ever wonder what their grandfather was doing with a hammer, breaking a random wall in Berlin? Will the masses reading the newspaper tomorrow stand up and take action when they see the bleeding skull of an innocent boy whose home was leveled by falling bombs? Will my fiancée remember this first scooter we bought together as she grows old? Will she even remember me by then? Each thought rattles the mind a tiny bit. Just as life is said to flash before your eyes when you die, the life of a photograph flashes before the photographer’s eyes as the moment of the click approaches. But the click is inevitable. Whatever anxieties and anticipations built up to the click, all within microseconds, are immaterial now. The film is the record of truth. It now has a tiny piece of life safely tucked away in the darkness of the camera. What transpired is immaterial. What will be developed is the inevitable truth. You see, your emotions towards the film were not permanent. As the photograph develops, your response to it will change; your life and your anticipations will readjust. Yes, at the time of the click you had just one “shot” at fame, or maybe at the one special moment in your life you wanted to hold on to. But now it’s gone. Its purpose is fulfilled. It’s time to roll the film and take another shot at life.
A photographer has to internalize that time in life is finite, just as a roll holds only 36 shots, and that not every shot will amount to something. But every one of them brings the roll closer to being ready for development. The real story of life will be in the complete roll. Each shot is just a passing moment.

Desires

A photographer desires the flashiest of optics, the longest of rolls, the films with the widest dynamic range. Some might even dream of a 110 film with the grain quality of medium format, but the realities of film science and life get in the way. Got a simple Minolta with smudges on the lens? The light is bad, and the film stock is a lousy 5-year-old Kodak ColorPlus 200 with just 3 usable shots left? But you are parked at the side of an unnamed road, sipping tea with your most intimate friend. Hearts have been poured out, and time is short as parting nears. You can’t wish for time to stop, or for the best golden-hour light to shine on this bittersweet moment. Sometimes, all you need is to take out the Minolta and just take the shot. Without growing weary of anticipation, understand that nothing in life is infinite; all resources, especially time, are limited. Capture it with whatever you have. Know that limited does not mean less. Even 36 shots are enough to capture a whole human life. It’s just that the value of each shot increases. Similarly, to drive around, a 10-year-old Mini will do just fine. You don’t need the Mustang Mach-E. You just need to understand the ephemeral nature of things and keep close the few things that are most valuable to you. For the last 10 years, I have more or less lived out of two suitcases. I believe the memories I have are of more value to me than things. Moments of joy are worth more, especially when they are few and far between. Film will give you those memories. It will teach you to limit your desires, and it will show you why a single shot is important. Each shot, good or bad, receives the same care of chemicals until it develops, and until you pause, ponder over the negatives and smile as you hold them up to the light, readjusting your anticipations with a feeling of contentment in fewer possessions.
Our desires will not end, and the modern world teaches us to desire more, crave more. But the way of a film photographer is to hold each roll in hand and thank cosmic providence for bringing this raw material to stop time momentarily. Then load the cartridge, pause, and take each shot with humility and thought.

Developing Clarity

Film needs love. As we discussed before, it is not an instant process. A dry seed can survive hundreds of years of extreme dearth, pain and arid climate, yet it germinates only when it gets the right conditions to trigger germination. In the same way, a film is not ready when you click. It lives in the darkness, absorbing only the random, brief moments of life when the photographer allows it to. Beyond that, it just exists. Passively. Only once its time has come is the film ready for development. It is developed not just with chemicals, but with extreme care and love. It is developed with skill, with the correct time and temperature, and with the correct developer, stop bath and fixer. Each film stock is different, so black and white, color and different ISOs each call for different chemicals and variables. Developing times are carefully tuned for the kind of “clarity” you need in a photograph. When the negative is transferred to photo paper, it feels like a veil lifting from a chaotic mind. A mind also develops clarity when it is washed with the chemicals of life experience. You learn who is here to stay till the end and who will leave when the ground shakes. You learn how society works – the joys, sorrows and inequalities it contains, the selfless service of some saints and the absolute villainy of scoundrels. Just as in a black and white photograph, the complete image is formed in shades of gray. There are no absolutes. There is no point in striving for an ideal, perfect state – life exists only in degrees of imperfection. Each person’s mind is like a film. Each is different, and each will develop clarity when the conditions are right, the washing time is correct and the temperature is just perfect. And one cannot force clarity when the time is not right. Sometimes the mind is restless, and rightfully so. Just as the shock of light in the camera’s dark chamber leaves the film in awe, the mind needs time after an exposure, however brief, to let it take hold and to make sense of it all.
As on film, the mind reflects what happened in that instant. And without judgement, the photographer winds the roll forward. Only once all the shots are taken is the film ready for development. It’s ready for the wash: the holy dip in the Ganges, the sweat of the bike ride up Mt Hamilton or the tears of recovery. They are all part of the chemical wash that yields a clear image. Then a new roll is picked, and once again the film is ready, this time traveling through the eastern Sierra mountains and the deserts of Nevada, taking in new images and new experiences. This time, the photographer and the film are ready. They know the concepts of impermanence, of anticipation and of desire. The light is now sharper, and the development time will be adjusted after exposure to bring a different, new clarity to life.

Why Film?

Why does it matter? In view of the impermanence of it all – why capture on film? What is the point? I can capture 100 photographs in a few seconds on the iPhone.

But did you really?

I think a smartphone captures life in motion. A camera, however, inspires you to capture a story. Film photography is not a nostalgic hobby. In the 21st century, it is a cleansing ritual. Of course, in a material sense it may be an expression of art, an occupation in legacy film education or just a way to gain hipster cred. But for me it has been a process to pause and think. A ritual of gathering chemicals, beakers and a Minolta, of revisiting childhood memories of our bathroom darkroom, of learning optics and photochemistry. A ritual of reflecting on the thoughts that cross my mind as I click the photos. The joy of washing and developing, with love and effort, something tangible that my fingers appreciate. It allowed me not just to look at a photo and think about the moment with joy or sadness, but to add a layer of distraction around the quality of the print, the execution of the development process, the research on optimizing fixer use, the careful observation of the minute silver crystals embedded in the film and the constant worry that I might have overexposed the one shot I really cared about. It let me escape everything. I knew I needed a wash, and returning to film after 20 years gave me this chance. So, capture the photons that seem to matter in the moment. Capture them and process the film to attain some clarity: the clarity of acknowledging impermanence, of understanding the futility of ever-increasing human desires, and of managing anticipations. While life continues to exist whether or not you capture a slice of it on film, consider the process of capturing life as a part of life itself. It is the part that makes you a photographer. A part that allows you to pause and observe. It allows you to love, and to love unconstrained, unconditionally.

Where does this path lead?

Nowhere and everywhere. Everyone has their own path; for me, this path leads to wondering about the dichotomy between the romantic and the rational views of film and of life (suggested reading: Zen and the Art of Motorcycle Maintenance by Robert M. Pirsig). While film aesthetics are important to me, and I’ve been obsessed with grain, scanning and photo processing lately, I have also bathed in the romantic aspects of photography. I crave understanding the camera as a machine as much as the emotions that taking a photo, and the photo itself, can produce. Mundane moments are special to me now. I write a poem for each photograph, which makes me think about it more (not kidding!). Kind of a creative distraction in itself. As I dove deeper into film, I acquired a Fujifilm X-T30. I have been assessing film aesthetics in its film simulation modes and tuning the simulations. In my head, my X-T30 is a film camera. Mostly, I shoot in the ACROS film simulation by default and imagine that I have just 36 shots for a session. Each photo now counts. Each photo is personal, and each one records a story in my mind as I click it. A tiny narrator in my head narrates my thoughts to me. I wait a while after I click, and only then view the images in peace – with Lucky Ali songs in the background 🙂 Silly, for sure. But as I said, for me this is a ritual for learning how to live.